Go vs Kotlin vs Java comparison

Introduction

Go is a fairly popular language with simple syntax and good performance. We recently compared it with Kotlin and Java, and in this article we share the results.

We compare four backend applications:

- Go

- Kotlin

- Java(JPA)

- Java(JDBC)

Each application uses stateless authentication based on JWT tokens and has a user service. The user service implements the following functionality:

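```go
// Users is the user service contract of the Go application.
type Users interface {
	// SignUp registers a new user who is not active yet.
	SignUp(ctx context.Context, user dto.SignUpDTO) error
	// VerifyUser activates a user whose verification code matches.
	VerifyUser(ctx context.Context, verifyDTO dto.VerifyDTO) error
	// SignIn checks the credentials and returns a JWT token.
	SignIn(ctx context.Context, user dto.SignInDTO) (auth.Token, error)
	GetById(ctx context.Context, id UUID) (*dto.UserDTO, error)
	GetAll(ctx context.Context) ([]dto.UserDTO, error)
	GetByEmail(ctx context.Context, email string) (*dto.UserDTO, error)
}
```

Users interface (Go)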

A new user is added to the application in two stages:

- /sign-up - new user registration;

- /verify - new user verification.

```kotlin
// Stage 1: register a new, not yet active user.
@PostMapping("/sign-up")
@ResponseStatus(HttpStatus.CREATED)
suspend fun signUp(@RequestBody signUpDTO: SignUpDTO): Unit =
    userService.signUp(signUpDTO)

// Stage 2: activate the user with the verification code.
@PostMapping("/verify")
suspend fun verifyUser(@RequestBody verifyDTO: VerifyDTO): Unit =
    userService.verifyUser(verifyDTO)
```

Registration and verification endpoints (Kotlin)

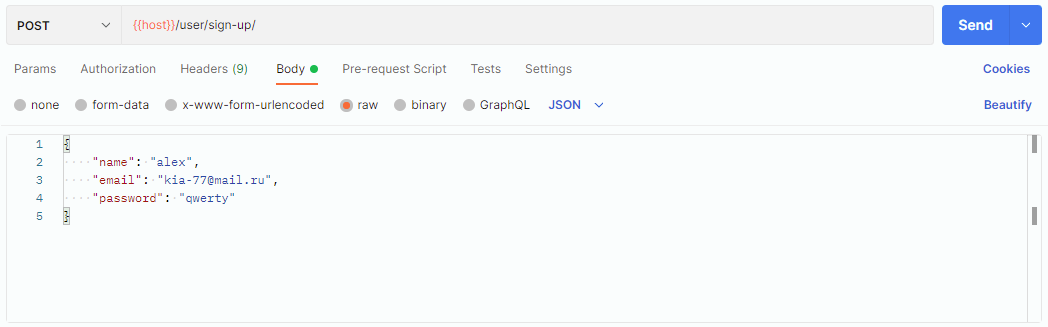

After registration, the new user is added to the database but is not active yet. To register a new user, send a POST /sign-up request with the following body:

New user registration (Postman)

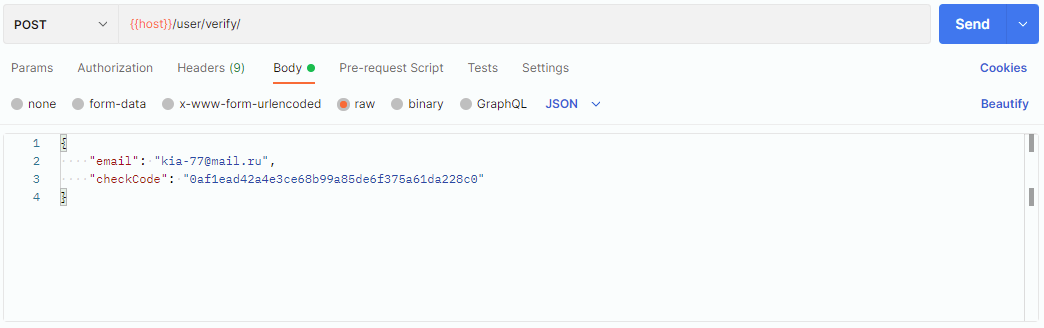

To activate the new user, send a POST /verify request with the following body:

New user activation (Postman)

During activation, the application looks up the profile of an inactive user by email. If the user is found and the verification code is correct, the user becomes active.
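The activation rule can be sketched in Go as follows. This is a minimal, hypothetical in-memory version written for illustration: the `pendingUser` type, the `verifyUser` function, the sentinel errors and the map used as a store are assumptions, not the application's actual code, which keeps users in Postgres.

```go
package main

import (
	"crypto/subtle"
	"errors"
	"fmt"
)

// pendingUser is a hypothetical user record; the real application stores
// users in Postgres.
type pendingUser struct {
	Email  string
	Code   string // verification code issued at sign-up
	Active bool
}

var (
	errUserNotFound = errors.New("inactive user not found")
	errInvalidCode  = errors.New("invalid verification code")
)

// verifyUser looks up an inactive user by email and activates it only if
// the submitted verification code matches.
func verifyUser(store map[string]*pendingUser, email, code string) error {
	u, ok := store[email]
	if !ok || u.Active {
		return errUserNotFound
	}
	// Constant-time comparison avoids leaking how many characters matched.
	if subtle.ConstantTimeCompare([]byte(u.Code), []byte(code)) != 1 {
		return errInvalidCode
	}
	u.Active = true
	return nil
}

func main() {
	store := map[string]*pendingUser{
		"alice@example.com": {Email: "alice@example.com", Code: "123456"},
	}
	fmt.Println(verifyUser(store, "alice@example.com", "000000")) // invalid verification code
	fmt.Println(verifyUser(store, "alice@example.com", "123456")) // <nil>
	fmt.Println(store["alice@example.com"].Active)                // true
}
```

Treating an already active user as "not found" mirrors the description above, where only inactive profiles are searched.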

The user service implements the following endpoints:

```java
@{...}
public TokenDTO signIn(@RequestBody SignInDTO signInDTO) { return userService.signIn(signInDTO); }

@{...}
public UserDTO getUserProfile() { return userService.getUserProfile(); }

@{...}
public UserDTO getUserByEmail(@PathVariable @NotBlank String email) { return userService.getByEmail(email); }
```

User service endpoints (Java)

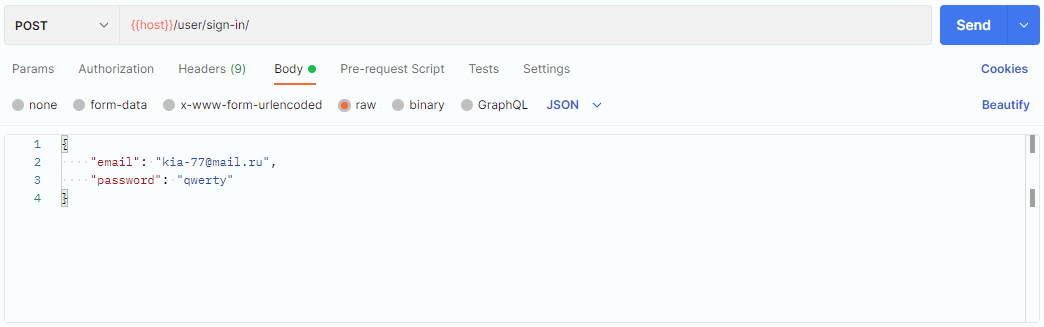

To authenticate, send a POST /sign-in request with the following body:

User authentication (Postman)

If the credentials are valid, the user receives a JWT token, and the authenticated user's profile is added to an in-memory cache implemented at the application level. The cache holds up to 100,000 profiles, and each profile stays in the cache for 4 hours. This basic setup is the same for all applications. Now let's discuss the implementation of each application.

1.1 Go

This application uses Go 1.18, and HTTP routing is implemented with the Gin web framework. User profiles are stored in Postgres and accessed through the pgx driver with a connection pool of 25 connections. The application also keeps user profiles in an in-memory cache.

```go
import (
	"context"

	"github.com/jackc/pgx/v4/pgxpool" // pgx v4 API (ConnectConfig)
)

// NewPostgresPool builds a pgx connection pool from the application config.
func NewPostgresPool(cfg Config) (pool *pgxpool.Pool, err error) {
	// Start from an empty connection string and set the fields explicitly.
	config, err := pgxpool.ParseConfig("")
	if err != nil {
		return nil, err
	}
	config.ConnConfig.Host = cfg.Host
	config.ConnConfig.Port = uint16(cfg.Port)
	config.ConnConfig.Database = cfg.DB
	config.ConnConfig.User = cfg.User
	config.ConnConfig.Password = cfg.Password

	// MaxConns = 25
	// MinConns = 2
	// MaxConnLifetime = 120000 * time.Millisecond
	// MaxConnIdleTime = 5 * time.Second
	config.MaxConns = cfg.MaxConns
	config.MinConns = cfg.MinConns
	config.MaxConnLifetime = cfg.MaxConnLifetime
	config.MaxConnIdleTime = cfg.MaxConnIdleTime

	pool, err = pgxpool.ConnectConfig(context.Background(), config)
	return
}
```

pgxpool configuration (Go)

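```go
import (
	"context"
	"time"

	"github.com/bluele/gcache"
)

// GCache is a typed wrapper around gcache's untyped LRU cache.
type GCache[K comparable, V any] struct {
	cache gcache.Cache
}

// NewGCache builds an LRU cache with at most size entries, each expiring
// `expires` after it is set.
func NewGCache[K comparable, V any](size int, expires time.Duration) *GCache[K, V] {
	return &GCache[K, V]{
		cache: gcache.New(size).Expiration(expires).LRU().Build(),
	}
}

// Get returns an error on a cache miss or an expired entry.
func (c *GCache[K, V]) Get(ctx context.Context, key K) (*V, error) {
	value, err := c.cache.Get(key)
	if err != nil {
		return nil, err
	}
	return value.(*V), nil
}

func (c *GCache[K, V]) Set(ctx context.Context, key K, value *V) error {
	return c.cache.Set(key, value)
}
```

In-memory cache implementation (Go)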

1.2 Kotlin

This application uses Spring WebFlux. Users are stored in Postgres, and the asynchronous database connection is implemented with jasync-sql, with at most 25 active connections in the pool. User profiles are cached with the Caffeine caching library. The architecture and settings of this application deliberately mirror the Go application described above, so that the comparison is as objective as possible.

```kotlin
// maxActiveConnections = 5
// maxConnectionsTtl = 120000
// maxIdleTime = 300
private val poolConfiguration = ConnectionPoolConfiguration(
    maxActiveConnections = postgresProperty.maxActiveConnections,
    maxConnectionsTtl = postgresProperty.maxConnectionsTtl,
    maxIdleTime = postgresProperty.maxIdleTime
)

@Bean
fun pool(): SuspendingConnection =
    ConnectionPool(
        factory = PostgreSQLConnectionFactory(configuration),
        configuration = poolConfiguration
    ).asSuspending
```

jasync-sql configuration (Kotlin)

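```kotlin
@Configuration
class CacheConfig(
    private val cacheProperty: CacheProperty
) {
    // Async Caffeine cache for user profiles: bounded size, and entries
    // expire after a period without access.
    @Bean
    fun userCache(): AsyncCache<UUID, UserDTO> = Caffeine.newBuilder()
        .maximumSize(cacheProperty.userMaximumSize)
        .expireAfterAccess(cacheProperty.userExpiredTimeHours, TimeUnit.HOURS)
        .buildAsync()
}
```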

Async cache configuration (Kotlin)

1.3 Java(JPA)

The third application is written in Java 17, with Spring MVC, Spring Data and Spring Security as the main stack. User profiles are stored in a Postgres database and cached with Caffeine. The connection pool size is 25.

```yaml
spring:
  datasource:
    driver-class-name: org.postgresql.Driver
    hikari:
      maximum-pool-size: 25
      minimum-idle: 2
      idle-timeout: 300000
      max-lifetime: 120000
    url: ${POSTGRES_URL}?currentSchema=${POSTGRES_SCHEMA:kbase}
    username: ${POSTGRES_USER}
    password: ${POSTGRES_PASSWORD}
```

Hikari pool configuration for database connection

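```java
import com.github.benmanes.caffeine.cache.Caffeine;
import java.util.concurrent.TimeUnit;
import org.springframework.cache.CacheManager;
import org.springframework.cache.annotation.EnableCaching;
import org.springframework.cache.caffeine.CaffeineCacheManager;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@EnableCaching
@Configuration
public class CacheConfig {

    // Cached entries expire 60 minutes after their last access.
    @Bean
    public Caffeine<Object, Object> caffeineConfig() {
        return Caffeine.newBuilder().expireAfterAccess(60, TimeUnit.MINUTES);
    }

    @Bean
    public CacheManager cacheManager(Caffeine<Object, Object> caffeine) {
        CaffeineCacheManager caffeineCacheManager = new CaffeineCacheManager();
        caffeineCacheManager.setCaffeine(caffeine);
        return caffeineCacheManager;
    }
}
```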

Caffeine cache configuration (Java)

1.4 Java(JDBC)

The fourth application is similar to the third one, but it uses Spring's JdbcTemplate instead of JPA. In both Java applications, the Tomcat server is configured with a maximum of 5,000 threads.

```yaml
debug: ${SPRING_DEBUG:false}
server:
  port: ${APP_PORT:9000}
  forward-headers-strategy: framework
  tomcat:
    threads:
      max: 5000
```

Tomcat server configuration of Java applications

2 Load testing

We use Gatling for load testing. This framework is written in Scala and built on Akka and Netty. It can create more than ten thousand concurrent virtual users and supports several workload models.

There are two fundamental approaches to load testing:

- Open workload model;

- Closed workload model.

In the open workload model, you control the arrival rate of users but not the number of concurrent users: the number of active users in the system depends on how quickly the application responds during the test.

With this model, we look for the moment when the number of concurrent active users becomes too large and the application stops responding to requests.

In the closed workload model, you control the number of concurrent users but not the number of new users injected per second.

We load the application with a fixed number of concurrent users, so incoming users form a queue and enter the system only when others leave it.

With this model, we determine the maximum number of requests per second that the application can serve.
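The relationship between the two models can be illustrated with Little's law, a standard queueing result added here for explanation (it is not part of the measurements): the average number of users in the system L equals the arrival rate λ multiplied by the average time W each user spends in the system, L = λW. In the open model λ is fixed, so a growing response time directly inflates the number of concurrent users; in the closed model L is fixed, so the sustainable request rate is L / W. The helper names and numbers below are illustrative.

```go
package main

import "fmt"

// concurrentUsers applies Little's law L = λ·W to an open model:
// arrivalRate is users per second, timeInSystem is seconds per user.
func concurrentUsers(arrivalRate, timeInSystem float64) float64 {
	return arrivalRate * timeInSystem
}

// throughput rearranges the law for a closed model: with a fixed number of
// concurrent users L, the sustained rate is λ = L / W.
func throughput(users, timeInSystem float64) float64 {
	return users / timeInSystem
}

func main() {
	// Open model: the same arrival rate, two different response times.
	fmt.Println(concurrentUsers(1000, 0.25)) // 250 users in the system
	fmt.Println(concurrentUsers(1000, 2))    // 2000 users in the system
	// Closed model: 500 concurrent users, 0.125 s per request.
	fmt.Println(throughput(500, 0.125)) // 4000 requests per second
}
```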

As the first stage of testing, we generate 3,000,000 new users and save them to a .json file.

To determine the limit of our application, we use levels and ramps:

- a level is a period during which users are injected at a constant rate;

- a ramp is a period during which the injection rate increases from one level to the next.

This approach is sometimes called capacity load testing.

After a simulation, Gatling generates a report with data and statistics, for example:

- Number of requests;

- Response time statistics;

- Active users statistics;

- Number of requests and responses during a simulation.

Analyzing these results is one of the most important parts of load testing.

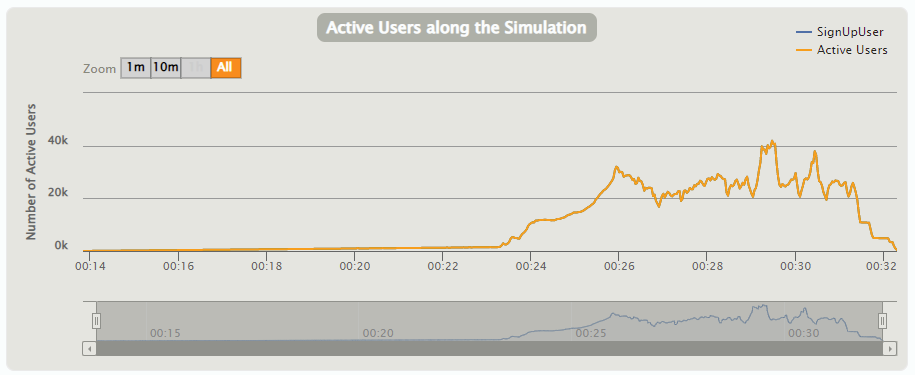

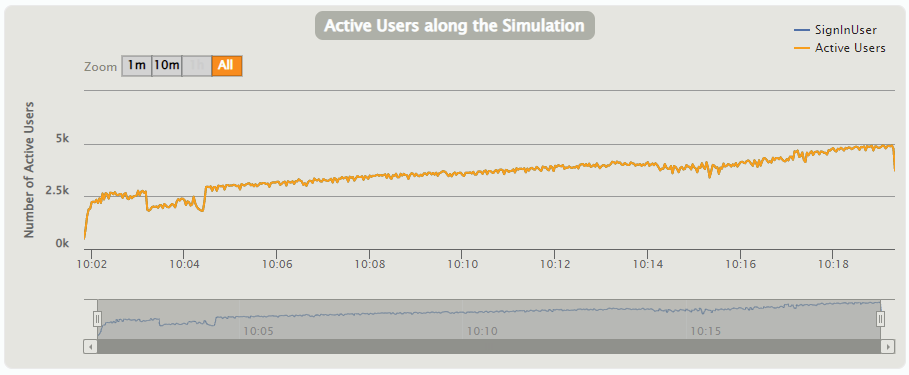

One of the charts in the report is Active Users along the Simulation. It is a mixed metric that applies to both open and closed workload models and represents “users who were active on the system under load at a given second”.

It’s computed as:

active users = (number of alive users at previous second) + (number of users that were started during this second) - (number of users that were terminated during previous second)
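As a worked example of this formula, the sketch below computes the metric second by second from per-second counts of started and terminated users. It assumes that "alive users at previous second" is the previous second's value of the metric itself, which turns the formula into a simple recurrence; the `activeUsers` helper and the input numbers are made up for illustration.

```go
package main

import "fmt"

// activeUsers applies
//
//	active[t] = active[t-1] + started[t] - terminated[t-1]
//
// with active[-1] = 0 and terminated[-1] = 0.
// terminated must be at least as long as started.
func activeUsers(started, terminated []int) []int {
	active := make([]int, len(started))
	prevActive, prevTerminated := 0, 0
	for t := range started {
		active[t] = prevActive + started[t] - prevTerminated
		prevActive, prevTerminated = active[t], terminated[t]
	}
	return active
}

func main() {
	started := []int{10, 10, 5, 0}
	terminated := []int{0, 4, 6, 3}
	fmt.Println(activeUsers(started, terminated)) // [10 20 21 15]
}
```

Note that a user who terminates during second t is still counted in that second and is subtracted only in second t + 1.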

2.1 Open Workload Model

We have already described the open workload model. The registration and verification endpoints fit this model, so we test them here. The reason is simple: we can’t control the number of concurrent users who are registering.

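```scala
import io.gatling.core.Predef._
import io.gatling.core.controller.inject.open.OpenInjectionStep

// usersPerSec = 25
// times = 105
// levelLasting = 5
// rampsLasting = 5
val openInjectionSteps: Seq[OpenInjectionStep] = Seq(
  incrementUsersPerSec(usersPerSec)        // raise the arrival rate at each step
    .times(times)                          // number of steps
    .eachLevelLasting(levelLasting)        // seconds at a constant rate
    .separatedByRampsLasting(rampsLasting) // seconds of ramp between levels
)
```

User injection in the open workload model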

Every injection step lasts 10 seconds, and user injection increases during 5 of those 10 seconds. Each step raises the arrival rate by 25 users per second, and there are 105 steps, so the simulation lasts 105 × 10 s = 1,050 s, or 17.5 minutes. We also limit the simulation to 20 minutes: if some requests are still active after 20 minutes, Gatling finishes the test and reports an error.

2.1.1 Go

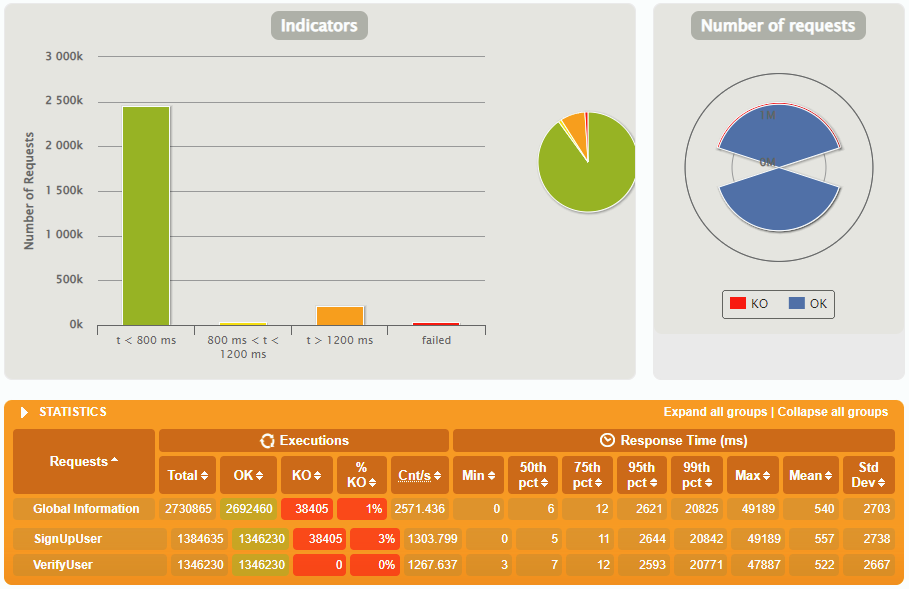

Testing results of Go application in open workload model

Active users along the Simulation of Go application in open workload model

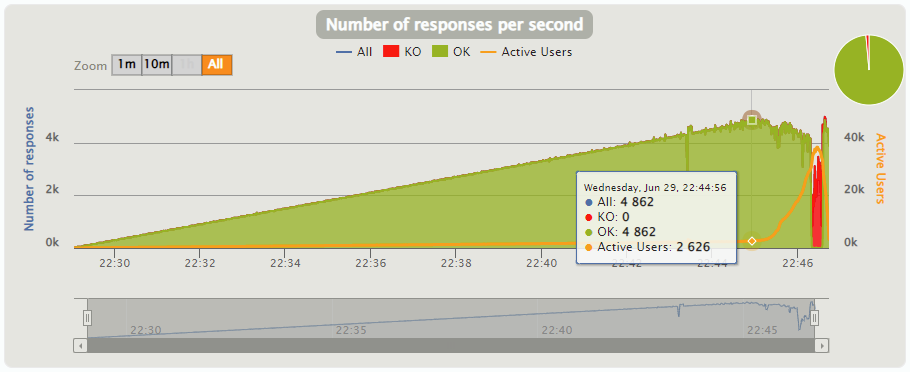

Number of responses per second of Go application in open workload model

2.1.2 Kotlin

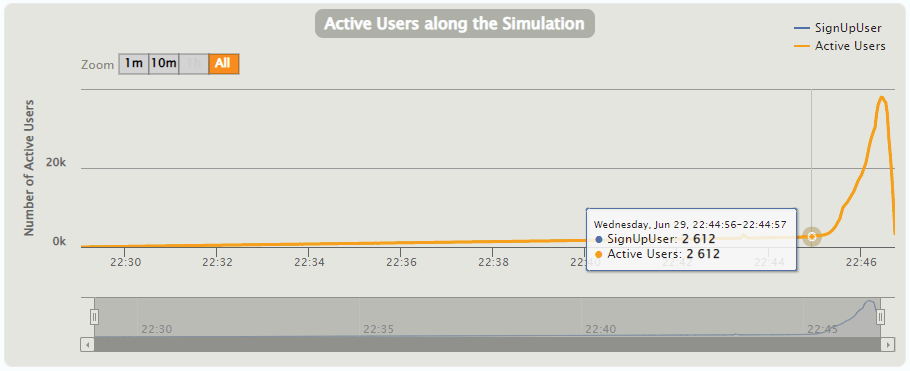

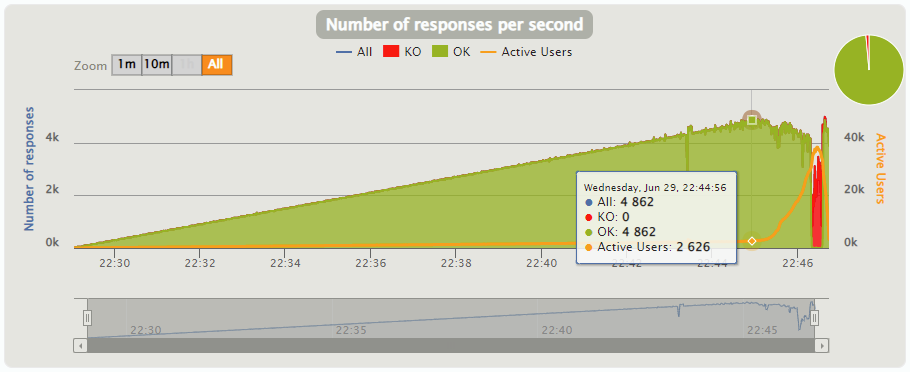

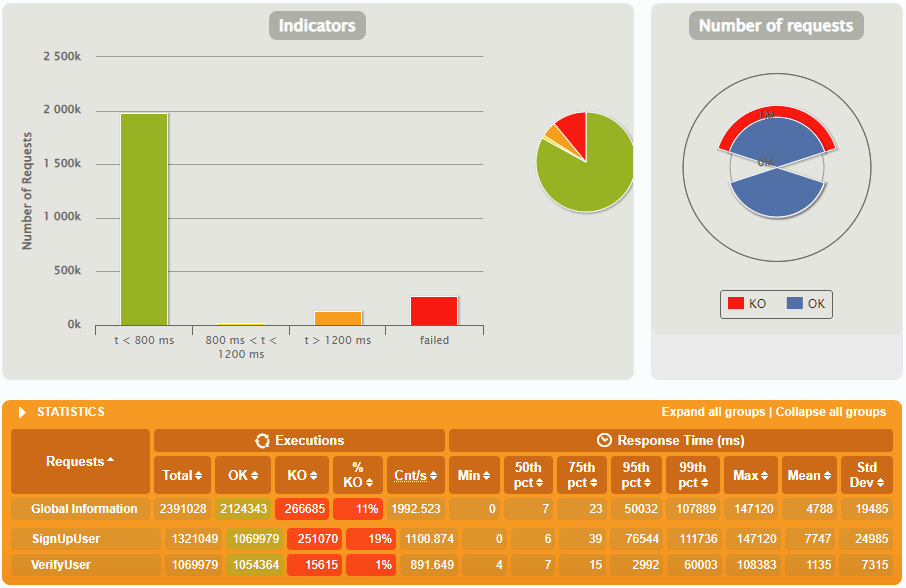

Testing results of Kotlin application in open workload model

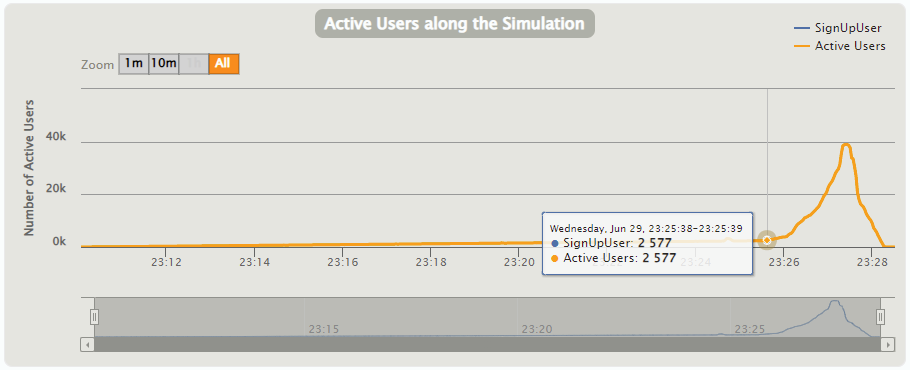

Active users along the Simulation of Kotlin application in open workload model

Number of responses per second of Kotlin application in open workload model

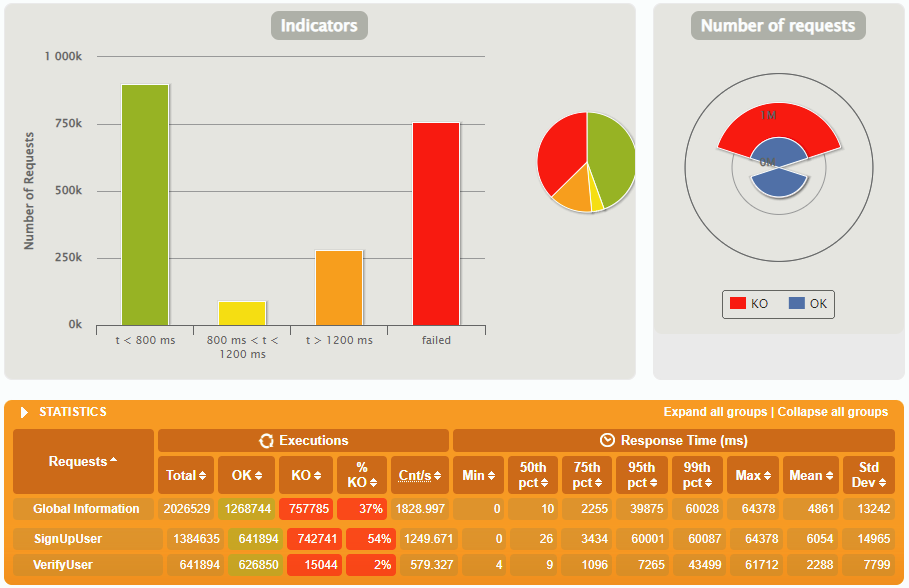

2.1.3 Java(JPA)

Testing results of Java(JPA) application in open workload model

Active Users along the Simulation of Java(JPA) application in open workload model

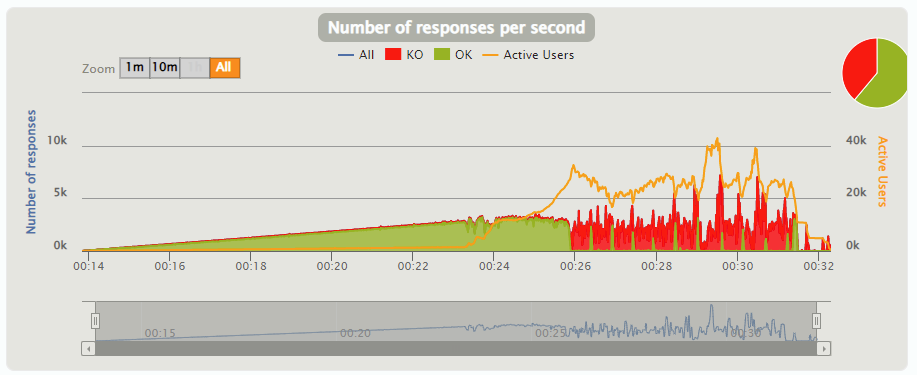

Number of responses per second of Java(JPA) application in open workload model

2.1.4 Java(JDBC)

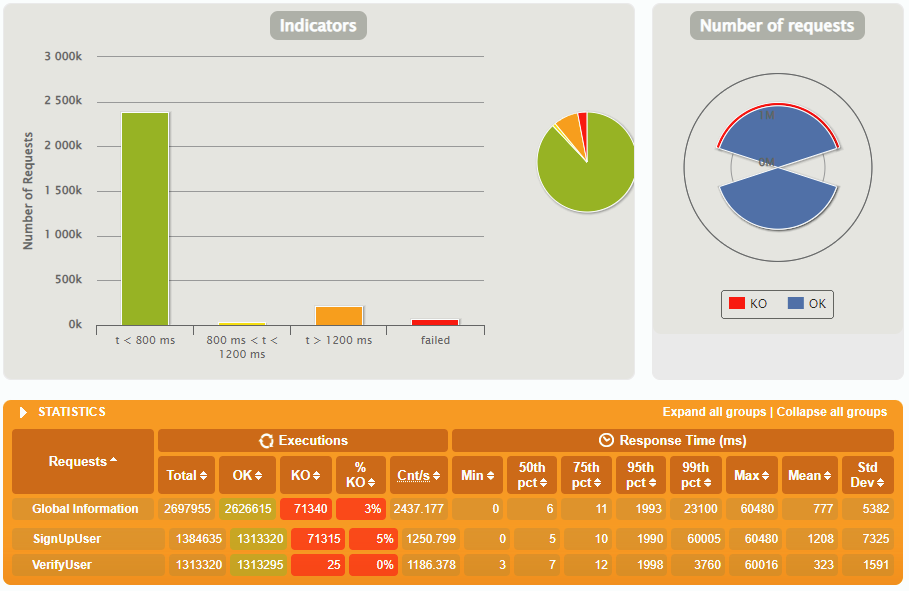

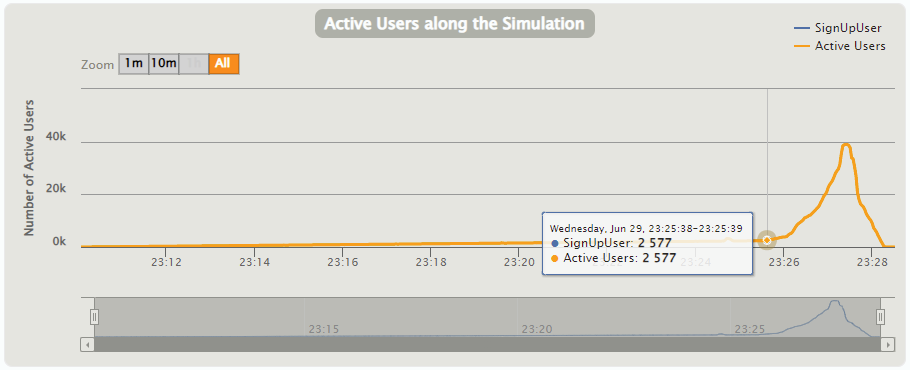

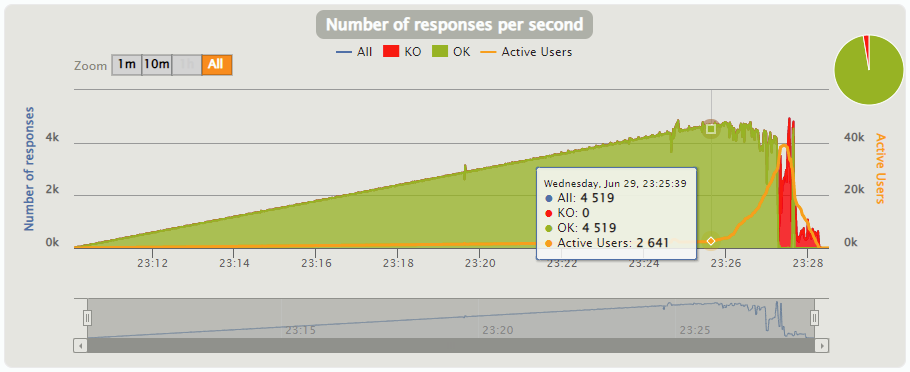

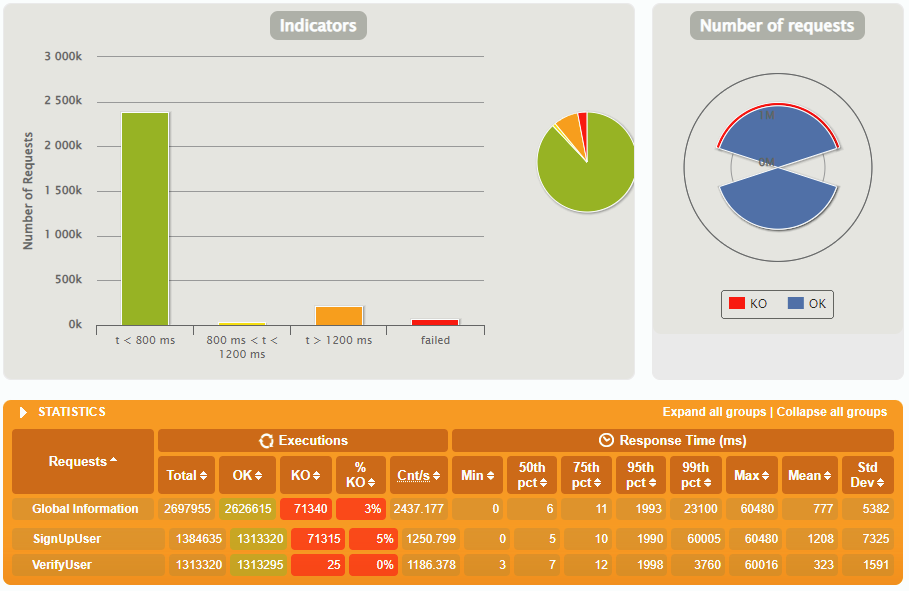

Testing results of Java(JDBC) application in open workload model

Active Users along the Simulation of Java(JDBC) application in open workload model

Number of responses per second of Java(JDBC) application in open workload model

Testing results in the open workload model

| | Go | Kotlin | Java(JPA) | Java(JDBC) |
|---|---|---|---|---|
| Simulation time in seconds | 1061 | 1200 | 1107 | 1107 |
| Total number of requests | 2730865 | 2391028 | 2026529 | 2697955 |
| Number of successful requests | 2692460 | 2124343 | 1268744 | 2626615 |
| Percentage of failed requests | 1 | 11 | 37 | 3 |

Response time results in the open workload model:

| | Go | Kotlin | Java(JPA) | Java(JDBC) |
|---|---|---|---|---|
| 75 percentile (75th pct), ms | 12 | 119 | 2255 | 11 |
| 75 percentile (75th pct), ms | 2703 | 16134 | 13242 | 5382 |
| Mean Response Time (Mean), ms | 540 | 3960 | 64378 | 777 |

2.2 Closed Workload Model

Now let's switch to the closed workload model. Here we test other endpoints, which require authentication. Each virtual user makes its requests one after the other: Authentication → GetUserProfile → GetUserByEmail (existing user) → GetUserByEmail (non-existent user). As in the open model, every injection step lasts 10 seconds, user injection increases during 5 of those 10 seconds, and 25 new users are added per step. The simulation time is 17.5 minutes.

2.2.1 Go

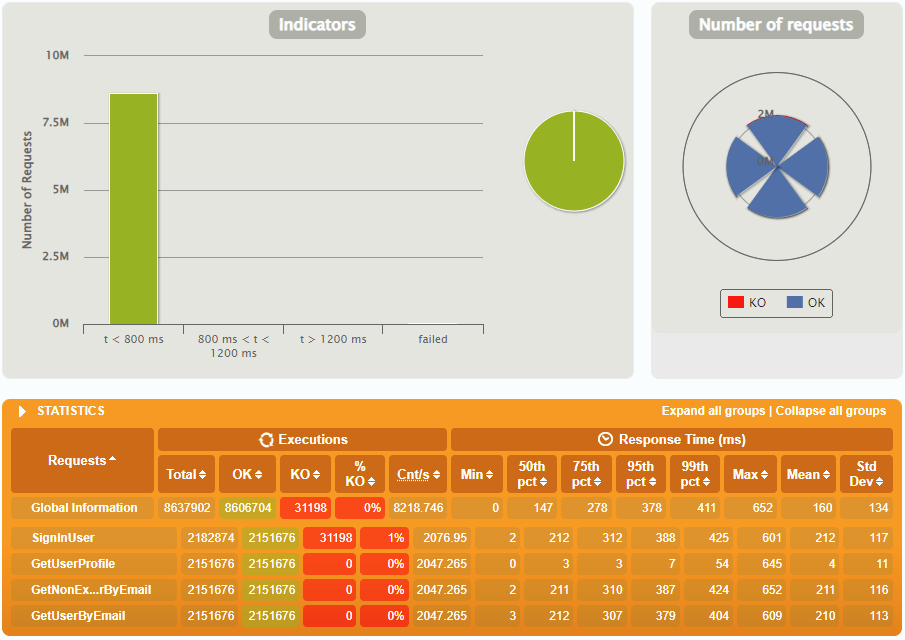

Testing results of Go application in closed workload model

Active Users along the Simulation of Go application in closed workload model

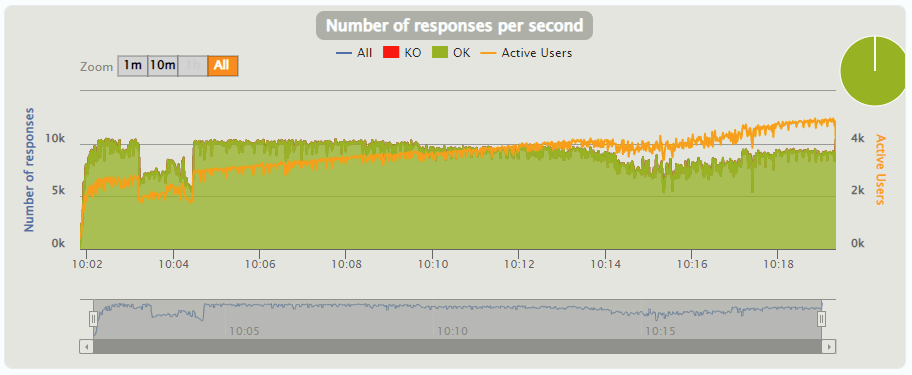

Number of responses per second of Go application in closed workload model

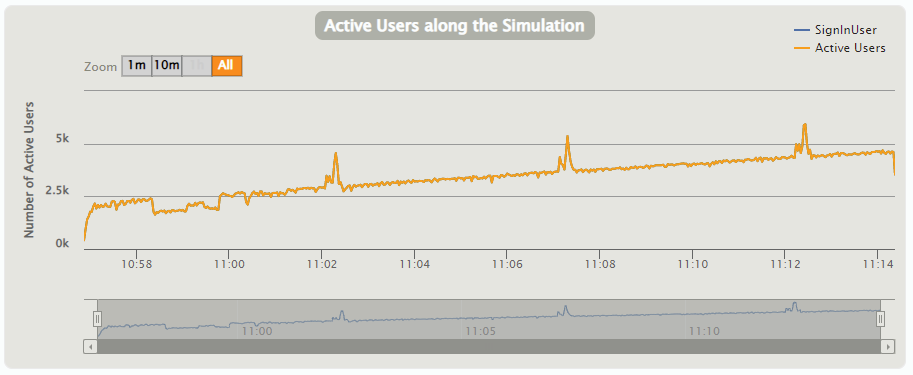

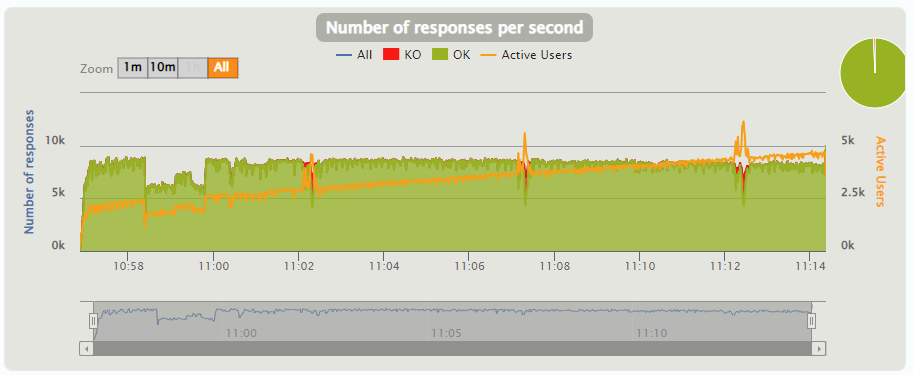

2.2.2 Kotlin

Testing results of Kotlin application in closed workload model

Active Users along the Simulation of Kotlin application in closed workload model

Number of responses per second of Kotlin application in closed workload model

2.2.3 Java(JPA)

Testing results of Java(JPA) application in closed workload model

Active Users along the Simulation of Java(JPA) application in closed workload model

Number of responses per second of Java(JPA) application in closed workload model

2.2.4 Java(JDBC)

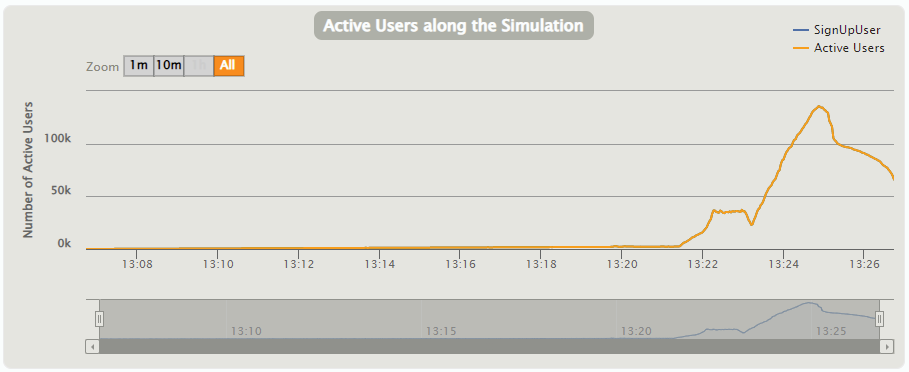

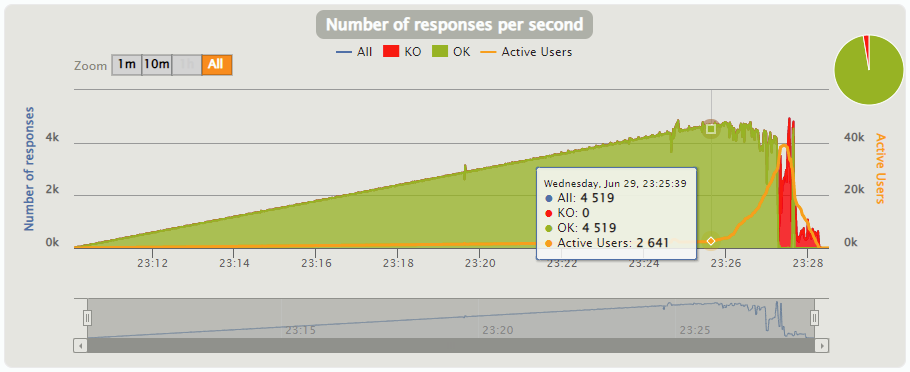

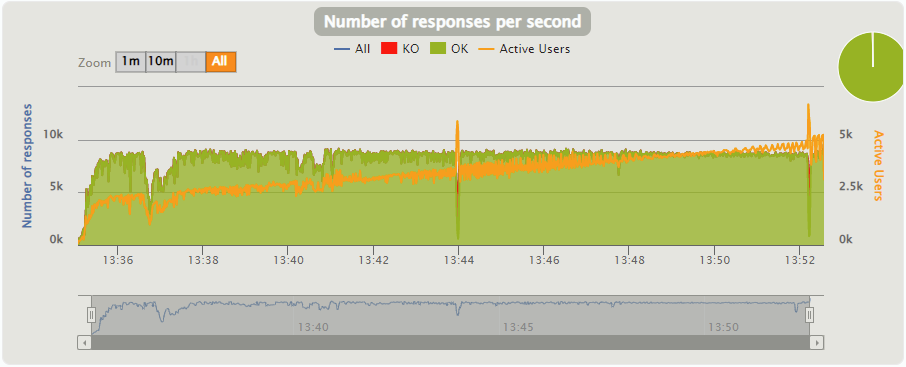

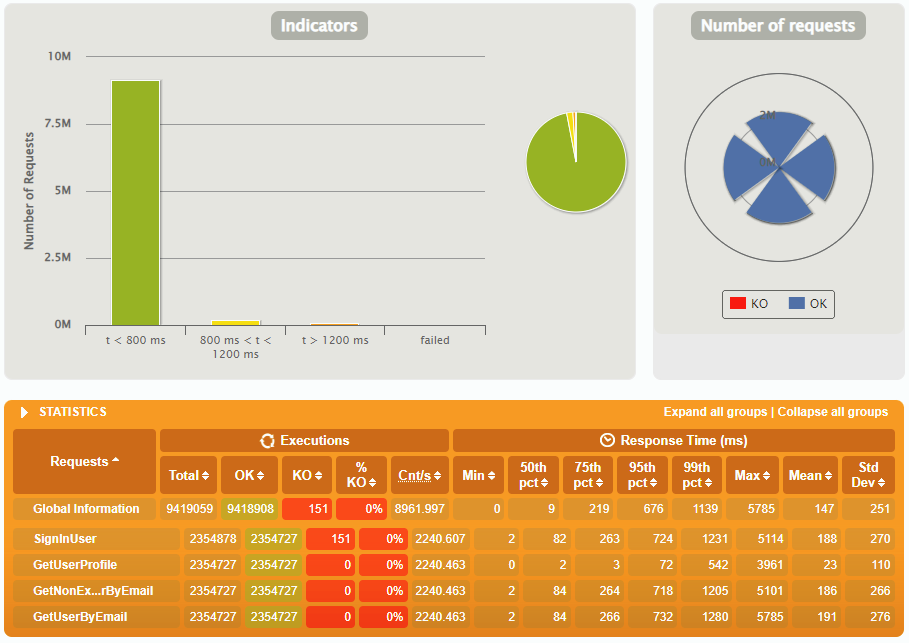

Testing results of Java(JDBC) application in closed workload model

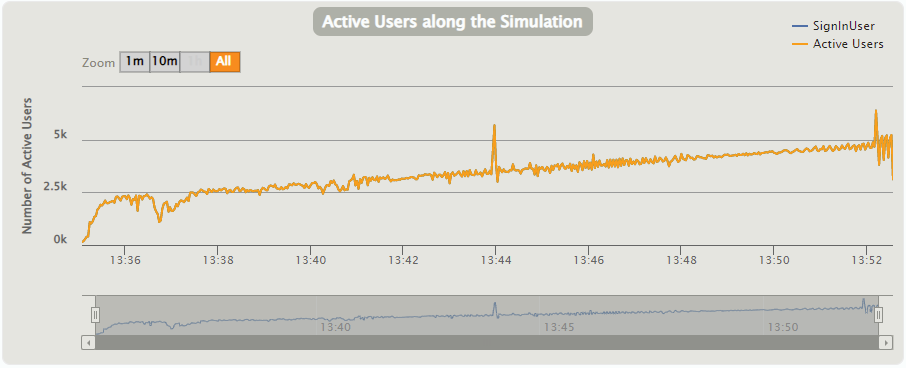

Active Users along the Simulation of Java(JDBC) application in closed workload model

Number of responses per second of Java(JDBC) application in closed workload model

Testing results in the closed workload model.

| | Go | Kotlin | Java(JPA) | Java(JDBC) |
|---|---|---|---|---|
| Simulation time in seconds | 1050 | 1050 | 1050 | 1050 |
| Total number of requests | 12087760 | 8637902 | 8469747 | 9419059 |
| Number of successful requests | 12087760 | 8606704 | 8412140 | 9418908 |
| Percentage of failed requests | 0 | 0.0001 | 1 | 0.0001 |

Response time results in the closed workload model.

| | Go | Kotlin | Java(JPA) | Java(JDBC) |
|---|---|---|---|---|
| 75 percentile (75th pct), ms | 196 | 278 | 251 | 219 |
| Mean Response Time (Mean), ms | 114 | 160 | 163 | 147 |
| Standard deviation (StdDev), ms | 147 | 134 | 274 | 251 |

3 Metrics

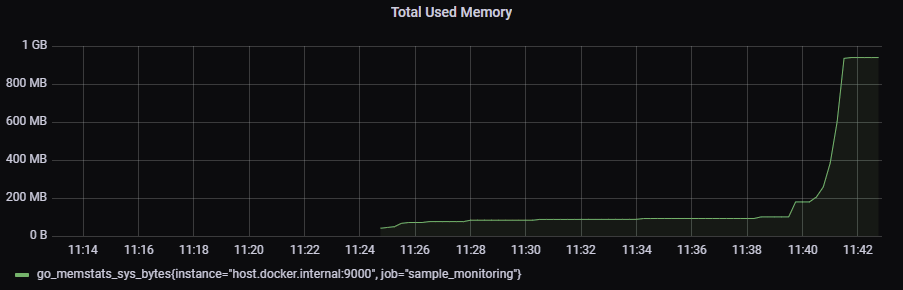

All applications are monitored with Prometheus, a well-known and reliable monitoring system, and the metrics are visualized in Grafana. Let’s compare the applications by CPU usage and total memory. The metrics are recorded during the open workload model test, because that model puts the heaviest load on the applications and therefore makes them consume the most resources.

3.1 Go

Total Memory of Go application

3.2 Kotlin

Total Memory of Kotlin application

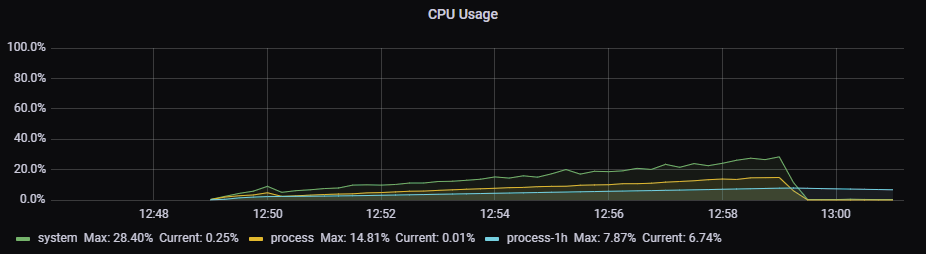

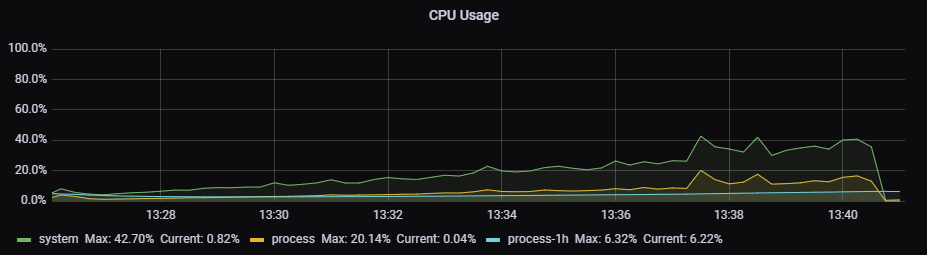

CPU Usage of Kotlin application

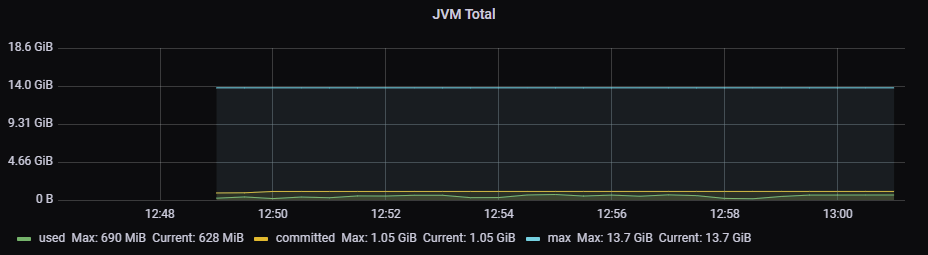

3.3 Java(JPA)

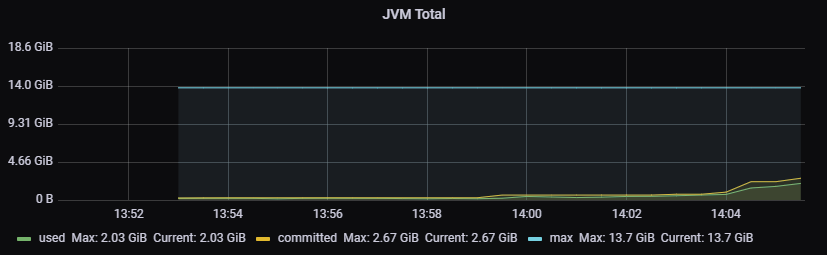

Total Memory of Java(JPA) application

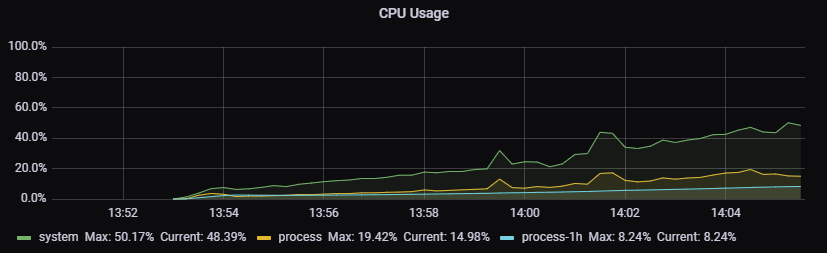

CPU Usage of Java(JPA) application

3.4 Java(JDBC)

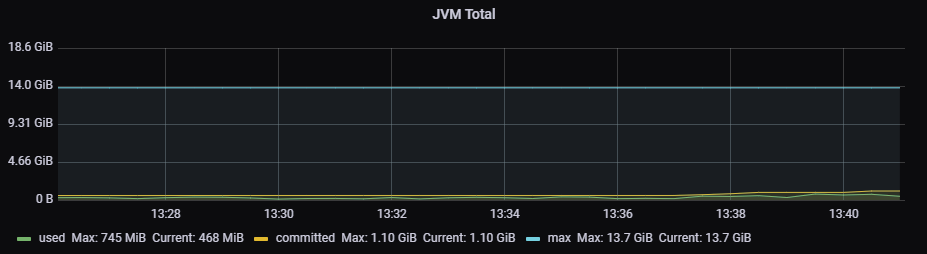

Total Memory of Java(JDBC) application

CPU Usage of Java(JDBC) application

3.5 Metrics result

| | Go | Kotlin | Java(JPA) | Java(JDBC) |
|---|---|---|---|---|
| CPU Usage, % | 28.4 | 50.1 | 42.7 | |
| Total Memory, GB | 0.95 | 1.05 | 2.67 | 1.1 |

4 A few notes about JPA results

Let's step aside from the main topic and quickly review the surprising results of the Java(JPA) application. First, look at its Total Memory value: the Java(JPA) application requires almost 2.5 times more memory than the other applications! Second, its test results, such as the number of successful responses, the percentage of failed responses and the mean response time, sometimes differ dramatically from those of the other applications. And last but not least, compare the memory usage of Java(JPA) and Java(JDBC) during the open workload model test: the difference between them is more than 2 times! Well, that is the price we pay for the convenience and apparent simplicity of JPA.
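The memory ratios can be checked against the Total Memory row of the metrics table. A small Go sketch (the `memoryRatios` helper is ours, for illustration only):

```go
package main

import (
	"fmt"
	"sort"
)

// memoryRatios divides the Java(JPA) figure by each other application's figure.
func memoryRatios(jpa float64, others map[string]float64) map[string]float64 {
	ratios := make(map[string]float64, len(others))
	for app, mem := range others {
		ratios[app] = jpa / mem
	}
	return ratios
}

func main() {
	// Total Memory row of the metrics table.
	others := map[string]float64{"Go": 0.95, "Kotlin": 1.05, "Java(JDBC)": 1.1}
	ratios := memoryRatios(2.67, others)
	apps := make([]string, 0, len(ratios))
	for app := range ratios {
		apps = append(apps, app)
	}
	sort.Strings(apps) // map iteration order is random, so sort for stable output
	for _, app := range apps {
		fmt.Printf("Java(JPA) / %-10s = %.2f\n", app, ratios[app])
	}
}
```

It prints about 2.81 for Go, 2.43 for Java(JDBC) and 2.54 for Kotlin, so Java(JPA) uses roughly 2.4 to 2.8 times as much memory as the other applications.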

Conclusion

In conclusion, let's compare all the results we obtained during testing. Go clearly has some advantages, for example the lowest memory consumption during the open workload model test. One reason is that Go is compiled ahead of time to native code and runs without a JVM. In the closed workload model, the Go application also performed well, which shows that a Go server can process a large number of requests per second. Based on the injection steps (105 steps × 25 users), all applications can serve 2,625 users.

The Go application has the best mean response time (114 ms) and processed the most requests (12,087,760) in the closed workload model. Java(JDBC) comes second by mean response time (147 ms), and the Kotlin application third (160 ms); however, Kotlin has the lowest standard deviation (134 ms), which means its response times are the most stable. Java(JPA) comes last, which is hardly surprising given the results above.