LLM & NLP-based term standardization cuts weeks of manual work

For a Forbes Global 2000 client, we automated the manual process of matching adverse event descriptions from clinical reports to standardized MedDRA vocabulary. Faster and more accurate processing of clinical data means lower R&D costs, faster regulatory submissions, and, ultimately, better treatments for patients.

The challenge: complex terminology and slow manual decoding

The client was manually converting unstructured medical terms from clinical study records into standardized MedDRA (Medical Dictionary for Regulatory Activities) codes, a global regulatory standard for pharmacovigilance and drug safety reporting.

Medical terms can consist of multiple words and must be mapped to terms defined by MedDRA. Manual mapping required qualified experts and could take up to two weeks per study, creating a bottleneck in the data processing pipeline. The company needed a reliable, automated way to decode the terms and integrate this step into its existing ETL flows.

The solution: automated MedDRA standardization pipeline

To ensure a focused and viable implementation, we first conducted a short AI Readiness Workshop with the client’s technical and business teams. In just three hours, the workshop helped us:

- analyze the client’s existing data pipelines and constraints,

- and select the simplest and most effective integration approach.

As a result, instead of redesigning the entire workflow, we defined a lightweight solution: a new automated decoding step embedded directly into the client’s existing ETL process.

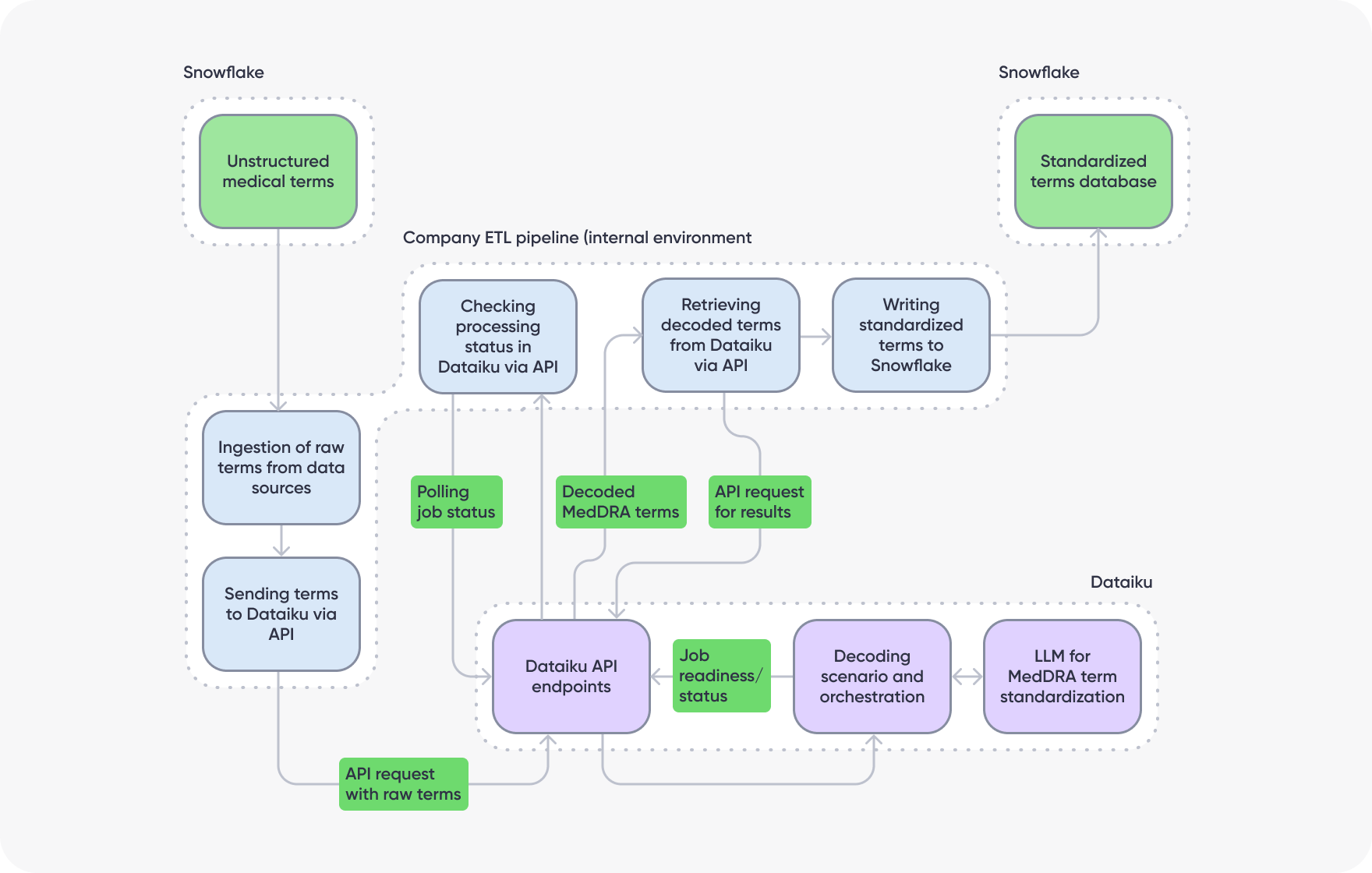

We designed and implemented an automated term decoding pipeline using a combination of large language models (LLMs) and Dataiku, and integrated it with the client's internal systems via API.

The core of the solution is an LLM-powered service, prompted and configured within Dataiku to accurately map free-text medical terms to the correct, standardized MedDRA codes. This new step was implemented as a reusable pipeline component that can be embedded into any existing data workflow.

Technical implementation

We used Dataiku for pipeline orchestration, LLM integration, and API management, while ChatGPT performs the term standardization itself.

API-driven integration

The client’s existing ETL pipeline sends batches of new medical terms to the Dataiku application via REST API. This step was added to their workflows with a single line of code.
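To make the ETL-side call concrete, here is a minimal sketch using only the Python standard library. It assumes a JSON endpoint that accepts a study identifier and a list of raw terms; the URL, the payload fields `study_id` and `terms`, and the helper `build_decode_request` are hypothetical, since the actual Dataiku API service and its schema are not described here.

```python
import json
import urllib.request

# Hypothetical endpoint; the real Dataiku API service URL is not given in the article.
DECODE_ENDPOINT = "https://dataiku.example.internal/decode-terms"


def build_decode_request(study_id: str, terms: list[str]) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying one batch of raw terms."""
    payload = {"study_id": study_id, "terms": terms}  # assumed payload schema
    return urllib.request.Request(
        DECODE_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_decode_request("STUDY-001", ["severe headache", "elevated ALT"])
# In the ETL job, the single added line would then be something like:
# urllib.request.urlopen(req, timeout=30)
```

Keeping request construction separate from sending makes the payload easy to inspect and test without a live service.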

LLM-powered decoding

Inside the secure Dataiku environment, the LLM processes the incoming terms and proposes MedDRA terms based on their semantic meaning.
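A sketch of the decoding step, assuming the model is asked to return a JSON object that maps each input term to a MedDRA term. The prompt wording, the response format, and `propose_meddra_terms` are illustrative assumptions rather than the project's actual prompt; the model call is passed in as a plain callable so the sketch does not depend on any particular LLM client library.

```python
import json
from typing import Callable

# Hypothetical prompt; the project's actual prompt is not published.
PROMPT_TEMPLATE = (
    "You are a pharmacovigilance coding assistant. For each verbatim adverse "
    "event term below, propose the best-matching MedDRA term. Reply only with "
    "a JSON object mapping each input term to its MedDRA term.\n\n"
    "Terms:\n{terms}"
)


def propose_meddra_terms(terms: list[str], llm: Callable[[str], str]) -> dict[str, str]:
    """Ask an LLM for MedDRA proposals and parse its JSON reply."""
    prompt = PROMPT_TEMPLATE.format(terms="\n".join(f"- {t}" for t in terms))
    reply = llm(prompt)
    try:
        proposals = json.loads(reply)
    except json.JSONDecodeError:
        return {}  # unparseable reply: every term stays undecoded for this run
    if not isinstance(proposals, dict):
        return {}  # wrong shape (e.g. a JSON list): treat the same way
    # Keep only answers for terms that were actually sent, coerced to strings.
    return {t: str(proposals[t]) for t in terms if t in proposals}


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call, returning a canned reply for the demo."""
    return json.dumps({
        "severe headache": "Headache",
        "elevated ALT": "Alanine aminotransferase increased",
    })


proposals = propose_meddra_terms(["severe headache", "elevated ALT", "felt odd"], fake_llm)
# "felt odd" received no proposal, so it is simply absent from the result.
```

The parsed proposals are only candidates at this point; nothing is written to the results until they pass the dictionary check.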

Multi-stage validation and hallucination control

To achieve near-100% accuracy, we implemented a dedicated verification step:

- All LLM-generated terms are automatically cross-checked against the official MedDRA dictionary. The system ensures that the proposed term actually exists in MedDRA before writing it to the results table.

- If no valid match is found, the term does not move further down the pipeline. As a result, the system outputs only validated MedDRA terms, or no data at all.
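The dictionary cross-check can be sketched as a lookup against the set of official MedDRA term names. This assumes the dictionary has been loaded from a licensed MedDRA release into a Python set and that matching ignores case and extra whitespace; the normalization rule and the function names are assumptions, as the article does not specify the exact matching logic.

```python
def normalize(term: str) -> str:
    """Collapse whitespace and ignore case so trivial formatting differences still match."""
    return " ".join(term.split()).casefold()


def validate_proposals(
    proposals: dict[str, str], meddra_terms: set[str]
) -> tuple[dict[str, str], list[str]]:
    """Split LLM proposals into dictionary-verified matches and rejected source terms."""
    official = {normalize(t): t for t in meddra_terms}  # normalized key -> official spelling
    accepted: dict[str, str] = {}
    rejected: list[str] = []
    for source_term, proposed in proposals.items():
        match = official.get(normalize(proposed))
        if match is None:
            rejected.append(source_term)  # not in MedDRA (e.g. hallucinated): hold back
        else:
            accepted[source_term] = match  # store the dictionary's own spelling
    return accepted, rejected


# Tiny illustrative dictionary; a real one would come from a licensed MedDRA release.
meddra = {"Headache", "Nausea", "Alanine aminotransferase increased"}
accepted, rejected = validate_proposals(
    {"severe headache": "headache ", "elevated ALT": "ALT raised"}, meddra
)
```

Writing the dictionary's official spelling, rather than the raw model output, means the results table only ever contains strings that exist in MedDRA.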

A term without a valid MedDRA match remains undecoded at this stage. It is automatically queued for the next scheduled pipeline run and reprocessed when the workflow executes again. If the term still cannot be decoded after repeated attempts, it is flagged and routed for manual expert review.
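The retry-then-escalate behavior amounts to a small piece of bookkeeping per undecoded term. In this sketch, `PendingTerm`, `route_undecoded`, and the limit of three attempts are hypothetical; the article only states that terms are retried on subsequent runs and flagged after repeated failures.

```python
from dataclasses import dataclass

MAX_ATTEMPTS = 3  # hypothetical limit; the article only says "repeated attempts"


@dataclass
class PendingTerm:
    term: str
    attempts: int = 0  # number of runs that have already failed to decode this term


def route_undecoded(
    pending: list[PendingTerm], max_attempts: int = MAX_ATTEMPTS
) -> tuple[list[PendingTerm], list[PendingTerm]]:
    """After a failed run, requeue each term for the next run or flag it for expert review."""
    requeue: list[PendingTerm] = []
    manual_review: list[PendingTerm] = []
    for item in pending:
        item.attempts += 1  # count the run that just failed
        if item.attempts >= max_attempts:
            manual_review.append(item)
        else:
            requeue.append(item)
    return requeue, manual_review


queue = [PendingTerm("felt odd"), PendingTerm("pain in the hand area", attempts=2)]
requeue, manual_review = route_undecoded(queue)
```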

Before production rollout, the system was additionally validated by domain experts. At this stage, around 95% of terms were decoded automatically, including terms that specialists had previously been unable to decode manually.

Reliable handshake and storage

The ETL process monitors job completion in Dataiku and retrieves validated results via API. The standardized data is then written directly into the client’s Snowflake data warehouse for downstream regulatory and analytical use.
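On the ETL side, monitoring job completion is typically a polling loop with a timeout. The sketch below assumes a `get_status` callable that wraps whatever status call the pipeline makes to Dataiku, and hypothetical status values `DONE` and `FAILED`; fetching the results and writing them to Snowflake are left out.

```python
import time
from typing import Callable

TERMINAL_STATES = {"DONE", "FAILED"}  # hypothetical status values


def wait_for_job(
    get_status: Callable[[], str],
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll a job-status callable until it reports a terminal state or the timeout elapses."""
    waited = 0.0
    while True:
        status = get_status()
        if status in TERMINAL_STATES:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"job still {status!r} after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds


statuses = iter(["RUNNING", "RUNNING", "DONE"])
result = wait_for_job(lambda: next(statuses), poll_seconds=0.0)
```

Injecting `sleep` keeps the loop testable without real waiting; the caller should check that the returned status is `DONE` before retrieving results.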

Data security

The system processes only study identifiers and medical terminology (disease names, adverse event descriptions). It does not process personal patient data and does not share any data outside the organization, ensuring compliance with internal security and privacy requirements.
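One way to enforce the restriction to identifiers and terminology is an explicit allowlist applied before any record leaves the ETL job. The field names below are hypothetical, and the snippet illustrates the idea rather than the client's actual controls.

```python
# Hypothetical field names; the real record schema is not described in the article.
ALLOWED_FIELDS = {"study_id", "verbatim_term"}


def to_outbound(record: dict) -> dict:
    """Keep only study identifiers and terminology; drop every other field before sending."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


raw = {
    "study_id": "STUDY-001",
    "verbatim_term": "severe headache",
    "patient_name": "J. Doe",
    "birth_date": "1970-01-01",
}
outbound = to_outbound(raw)
```

An allowlist, unlike a blocklist of known sensitive columns, also excludes by default any new fields added upstream.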

Operational integration and results

The solution runs as a scheduled step within the client’s production pipelines:

- New study records (typically covering one day of gathered data) are processed every few hours.

- Night-time execution ensures that by the start of the business day, specialists receive fully decoded and standardized terms.

- Load is distributed across processing windows, ensuring stable system performance.
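Within each processing window, a simple way to keep individual API calls small and predictable is to split the accumulated terms into fixed-size batches. This is an assumed technique for illustration; the batch size and the `chunked` helper are hypothetical, and the article does not say how the load is actually split.

```python
from collections.abc import Iterator


def chunked(terms: list[str], batch_size: int) -> Iterator[list[str]]:
    """Yield consecutive fixed-size batches of terms (the last batch may be shorter)."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(terms), batch_size):
        yield terms[start:start + batch_size]


batches = list(chunked([f"term {i}" for i in range(7)], batch_size=3))
```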

This approach turned term standardization into a fully automated background process instead of a manual expert task.

The project removed a critical manual bottleneck from the client’s pharmacovigilance data pipeline:

- MedDRA decoding time reduced from up to two weeks of manual work to near real time.

- Near-100% accuracy in standardization, significantly reducing the risk of reporting errors.

- Faster processing cycles for critical pharmacovigilance data.

- Seamless integration with the existing IT landscape (ETL pipelines, Dataiku, Snowflake) without disrupting operations.

The client now has an automated, scalable, and trustworthy mechanism for medical term standardization, turning a slow, expert-only task into a fully integrated data processing service that delivers standardized terms every morning.

***

Earlier, we described how we built a GenAI search platform for a major pharma company, turning days of manual research across scientific sources into a process that takes minutes. Read the full story here.